[This post appeared on The 2:10 Leader Blog earlier this year. I am importing this year's posts as I transition them to MarcFey.com.]

Gmail and Google+ went down yesterday. In a weird coincidence, Google users searching on the term "Gmail" hit a bizarre glitch that had them inadvertently sending emails to a guy named Dave from Fresno (if you're interested in the details, read TechCrunch's coverage: http://tcrn.ch/1dY6vMW).

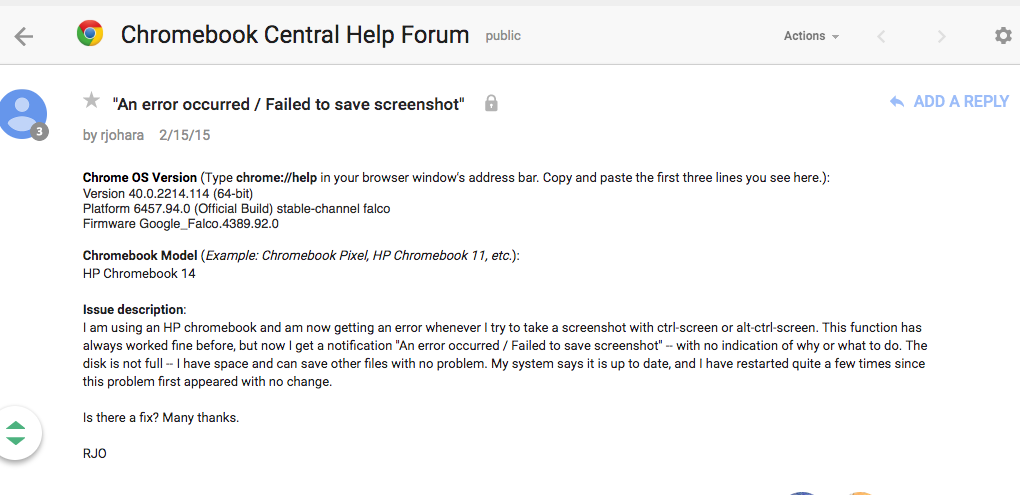

“Temporary Error 500. We’re sorry, but your Gmail account is temporarily unavailable.”

Wow. Really? Gmail? …I mean, Google?

The idea of Google experiencing this level of failure is sobering. If you have any responsibilities at work (any at all) or are in a service role, here are 5 things Google's colossal technical failures can teach us about work, failure, and the challenge of supporting customers, especially if you work in the unpredictable world of technology (as I do):

1. The colossal failure WILL happen, and it will likely come at the WORST possible time. If Google can experience this level of technical failure, you and I can forget about getting it right every time. Google made $51B last year (http://bit.ly/KVu8sy), so resources weren't the issue. This is a hard reality, because the people we serve in our organizations don't typically have a grid for "count on it, failure will happen." Or, if they do, it lasts only until the failure happens to them.

What can we do? Socialize the expectation that failure WILL happen. Mistakes will be made. Technology, processes, people: lots of things will let us down. But also communicate that when it does, "I will take full responsibility and will do everything possible to rectify the situation."

2. Brace for the criticism (and for plenty of laughter at your expense). At the same time Gmail went down, the engineers responsible for keeping Google alive just happened to be sitting down for a Q&A on Reddit. Imagine that! In the words of TechCrunch writer Greg Kumparak: "Heh. Worst. Timing. Ever." He also pointed out that this team of engineers is called the "Site Reliability Team," which is "responsible for the 24×7 operation of Google.com." Funny, really funny stuff. Poking fun at Google+, one writer quipped, "The problem is currently affecting a huge number of users. Google+ is also down, although you'd be forgiven for not having noticed that sooner."

What can we do? Not much, except laugh. Remember not to take ourselves too seriously. Let the criticism shape the kind of leaders we are: take responsibility, respond with patience and grace, and do everything we can to "make it right."

3. Use this as an opportunity to reinforce and shape your company's culture. One of the most telling moments of the story came during the Reddit (Reddit.com) Q&A. The engineers kept answering questions while the services were down (because you can bet Google employs more than four engineers). Here is the revealing exchange:

Reddit user notcaffeinefree asks: “Sooo…what’s it like there when a Google service goes down? How much freaking out is done?”

Google’s Dave O’Connor responds: “Very little freaking out actually, we have a well-oiled process for this that all services use— we use thoroughly documented incident management procedures, so people understand their role explicitly and can act very quickly. We also exercise this [sic] processes regularly as part of our DiRT testing. Running regular service-specific drills is a big part of making sure that once something goes wrong, we’re straight on it.” (http://tcrn.ch/1d2FQIK)

And that, in a nutshell, may be why Google banked $51B last year.